Dota 2 Tournament Simulator

A tournament simulator with match prediction capabilities powered by AI.

A full-stack web application that simulates an 8-team, double-elimination Dota 2 tournament. Match outcomes are predicted by a neural network trained on historical data using multiple advanced rating systems.

This project provides an end-to-end pipeline for predicting Dota 2 match outcomes. It includes scripts to:

- Gather Data: Fetch thousands of professional match results from the OpenDota API.

- Calculate Historical Ratings: Process matches chronologically to compute multiple historical skill ratings for each team.

- Train a Model: Train a PyTorch neural network on the generated dataset to predict win probabilities.

- Simulate a Tournament: A Flask-based web application provides a Liquipedia-style bracket interface where users can select 8 teams and run a full tournament simulation based on the model’s predictions.

Features

- Automated Data Pipeline: A bash script automates the entire setup process.

- Historical Rating Engine: Implements Elo (with variable K-factors) and a custom Glicko-2 engine from scratch.

- Neural Network Prediction: Uses a PyTorch model to predict match outcomes based on rating differences.

- Interactive Web Interface: A sleek, Liquipedia-inspired tournament bracket built with Flask, HTML, CSS, and JavaScript.

- Full Tournament Simulation: Simulates a complete 8-team, double-elimination bracket, including upper and lower brackets, to determine a champion.
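As a rough illustration of the bracket logic, here is a minimal sketch of an 8-team double-elimination simulation. It is not the application's actual code. The `predict(team_a, team_b)` callback (returning the probability that `team_a` wins), the sampling of winners from that probability, the seeding order, the lower-bracket crossover, and the absence of a grand-final bracket reset are all assumptions made for the example.

```python
import random


def play(team_a, team_b, predict, rng):
    """Return (winner, loser); team_a wins with probability predict(team_a, team_b)."""
    if rng.random() < predict(team_a, team_b):
        return team_a, team_b
    return team_b, team_a


def play_round(pairs, predict, rng):
    """Play every pairing in a round and return (winners, losers) in order."""
    results = [play(a, b, predict, rng) for a, b in pairs]
    return [w for w, _ in results], [l for _, l in results]


def simulate(teams, predict, rng=None):
    """Simulate an 8-team double-elimination bracket and return the champion."""
    rng = rng or random.Random()
    assert len(teams) == 8
    # Upper bracket: quarterfinals, semifinals, final.
    ub1_w, ub1_l = play_round(list(zip(teams[0::2], teams[1::2])), predict, rng)
    ub2_w, ub2_l = play_round(list(zip(ub1_w[0::2], ub1_w[1::2])), predict, rng)
    ub_champ, ub_final_loser = play(ub2_w[0], ub2_w[1], predict, rng)
    # Lower bracket: quarterfinal losers meet, then face the semifinal losers
    # (crossed over, an assumption here, to avoid immediate rematches).
    lb1_w, _ = play_round(list(zip(ub1_l[0::2], ub1_l[1::2])), predict, rng)
    lb2_w, _ = play_round(list(zip(lb1_w, reversed(ub2_l))), predict, rng)
    lb3_w, _ = play(lb2_w[0], lb2_w[1], predict, rng)
    lb_champ, _ = play(lb3_w, ub_final_loser, predict, rng)
    # Grand final, with no bracket reset in this sketch.
    champion, _ = play(ub_champ, lb_champ, predict, rng)
    return champion


# Toy stand-in for the trained model: every match is a coin flip.
champion = simulate([f"Team {i}" for i in range(1, 9)], lambda a, b: 0.5, random.Random(42))
```

With this layout the bracket plays 14 matches in total. Swapping the coin-flip lambda for a function that calls the trained model would give a simulation driven by predicted win probabilities.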

Understanding the Rating Systems

A key feature of this project is its use of robust rating systems to quantify team skill. Although I wrote an in-depth explanation in this post, here’s a brief description of how they work.

The Elo Rating System

The Elo system is the foundation of many competitive rating systems, originally designed for chess. Its goal is to calculate the relative skill level of players in a zero-sum game.

- Core Concept: Each team has a rating number. When two teams play, the winner takes points from the loser. The number of points exchanged depends on the difference in their ratings.

- Expected Outcome: If a high-rated team beats a low-rated team, only a few points are exchanged, as this was the expected outcome. However, if the low-rated team causes an upset, it will gain a large number of points.

- The K-Factor: The maximum number of points that can be exchanged is determined by a value called the K-factor.

  - A low K-factor (like k=32, used in this project) leads to smaller, more stable rating changes.

  - A high K-factor (like k=64) makes the ratings more volatile and responsive to recent results.

This project calculates both Elo32 and Elo64 to feed the model a richer set of features.
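The point exchange described above can be sketched with the standard Elo formulas. This is a minimal illustration, not the project's rating engine: the 400-point logistic scale and the example ratings (1600 and 1400) are the textbook defaults and are assumed here, and the function names are made up for the example.

```python
from typing import Tuple


def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the standard Elo logistic curve."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_elo(winner: float, loser: float, k: float) -> Tuple[float, float]:
    """Return the new (winner, loser) ratings after one match."""
    # The winner gains exactly what the loser gives up (zero-sum).
    delta = k * (1.0 - expected_score(winner, loser))
    return winner + delta, loser - delta


# A favourite (1600) beating an underdog (1400) gains few points...
fav_win = update_elo(1600, 1400, k=32)
# ...while an upset moves far more, and k=64 doubles every change.
upset_32 = update_elo(1400, 1600, k=32)
upset_64 = update_elo(1400, 1600, k=64)
```

With these numbers the favourite gains roughly 7.7 points for the expected win, while the underdog gains roughly 24.3 points for the upset at k=32 and twice that at k=64.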

The Glicko-2 Rating System

Developed by Professor Mark Glickman, Glicko-2 is a significant improvement upon the Elo system because it introduces the concept of rating uncertainty. It acknowledges that we can be more or less confident in a team’s rating.

Glicko-2 tracks three values for each team:

- Rating (μ): This is the skill rating, similar to Elo. It’s the system’s best guess of a team’s strength.

- Rating Deviation (RD or φ): This is the measure of uncertainty. An RD is like a margin of error: a low RD means we are very confident in the team’s rating (e.g., a veteran team that plays often), while a high RD means the rating is less reliable (e.g., a new team or a team that hasn’t played in a long time). A team with a high RD will see its rating change much more drastically after a match.

- Rating Volatility (σ): This measures the consistency of a team’s performance over time. A team with surprisingly erratic results (e.g., beating strong teams but losing to weak ones) will have a high volatility, which causes their RD to increase more quickly.

In essence, Glicko-2 provides a much more nuanced view of skill by not only estimating a team’s strength but also how reliable that estimation is.
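To make the role of RD and volatility more concrete, the sketch below shows a few building blocks from Glickman's published Glicko-2 description: the conversion to the internal (μ, φ) scale, the discount function g(φ), the expected score, and the way φ grows before each update. It is only a fragment of the full algorithm (the iterative volatility update is omitted) and is not taken from this project's engine; the function names are chosen for the example.

```python
import math

SCALE = 173.7178  # conversion factor from Glickman's Glicko-2 description


def to_glicko2(rating: float, rd: float):
    """Convert a displayed rating/RD pair to the internal (mu, phi) scale."""
    return (rating - 1500.0) / SCALE, rd / SCALE


def g(phi: float) -> float:
    """Discount factor: drops below 1 as the opponent's uncertainty grows."""
    return 1.0 / math.sqrt(1.0 + 3.0 * phi ** 2 / math.pi ** 2)


def expected_score(mu: float, mu_opp: float, phi_opp: float) -> float:
    """Expected score against an opponent with rating mu_opp and deviation phi_opp."""
    return 1.0 / (1.0 + math.exp(-g(phi_opp) * (mu - mu_opp)))


def inflate_phi(phi: float, sigma: float) -> float:
    """Deviation at the start of an update: uncertainty grows with volatility."""
    return math.sqrt(phi ** 2 + sigma ** 2)


# An established team (RD 50) facing a newcomer (RD 350).
mu_a, phi_a = to_glicko2(1700.0, 50.0)
mu_b, phi_b = to_glicko2(1500.0, 350.0)
p_a = expected_score(mu_a, mu_b, phi_b)
```

Because g(φ) shrinks as the opponent's deviation rises, the same rating gap yields an expected score closer to 0.5 when the opponent's rating is uncertain. A team that does not play keeps its rating, but its deviation grows through `inflate_phi`.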

The AI used

The train.py script is the core of the machine learning pipeline for this project. Its primary purpose is to take the structured, historical match data generated by the data collection script and use it to train a neural network capable of predicting match outcomes. Upon successful training, it produces three critical files:

- dota2_predictor.pt: A serialized TorchScript model file containing the trained neural network, ready for deployment.

- scaler.json: A file containing the mean and scale values used to normalize the input features. This is essential to ensure that live data is processed in the same way as the training data.

- team_data.json: A snapshot of the most recent ratings for every team, which is loaded by the web application to make predictions for new matchups.
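As an illustration of why scaler.json matters at prediction time, the sketch below applies stored mean and scale values to a new feature vector. The key names ("mean", "scale") and every number are assumptions made for the example; the real file layout may differ.

```python
import json

# Hypothetical contents of scaler.json; the real key names and values may differ.
scaler_json = (
    '{"mean": [0.0, 0.0, 0.0, 120.0, 120.0],'
    ' "scale": [150.0, 200.0, 180.0, 60.0, 60.0]}'
)


def scale_features(raw, scaler):
    """Standardize each feature with the training-time mean and scale."""
    return [(x - m) / s for x, m, s in zip(raw, scaler["mean"], scaler["scale"])]


scaler = json.loads(scaler_json)
# Five raw features for one matchup, in the same order used during training.
scaled = scale_features([150.0, 200.0, -90.0, 60.0, 180.0], scaler)
```

Reusing the training-time statistics, rather than recomputing them on live data, keeps the inputs on the scale the network saw during training.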

Inputs and Feature Engineering

The model does not use raw rating numbers directly. Instead, it relies on carefully engineered features that represent the relative strength and certainty of the two competing teams. The five inputs to the model are:

- elo32_diff & elo64_diff: These are the differences between the Radiant and Dire teams’ Elo ratings, calculated with K-factors of 32 and 64, respectively.

  - Why they are used: The raw rating of a team is less important than its rating relative to its opponent. A large positive difference strongly suggests a win for the Radiant team, making this the most powerful predictive signal. Using two different K-factors provides the model with both a stable (k=32) and a more volatile (k=64) view of the teams’ recent performance.

- glicko_mu_diff: The difference between the Radiant and Dire teams’ Glicko-2 ratings (μ).

  - Why it is used: Similar to the Elo difference, this provides another measure of relative skill, but one that is influenced by the more sophisticated Glicko-2 update rules.

- radiant_rd & dire_rd: The raw Rating Deviation (RD or φ) for the Radiant and Dire teams from the Glicko-2 system.

  - Why they are used: The RD is a measure of uncertainty. A high RD indicates that a team’s rating is less reliable (e.g., they are a new team or haven’t played in a while). By feeding the RDs directly into the model, it can learn to temper its predictions. For example, it might learn that a large rating difference is less meaningful if the favored team also has a very high RD.
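The five inputs could be assembled along these lines. This is a sketch under assumptions: the per-team dictionary keys (`elo32`, `elo64`, `glicko_mu`, `glicko_rd`) and the example ratings are hypothetical, and only the feature order follows the list above.

```python
def build_features(radiant: dict, dire: dict) -> list:
    """Return [elo32_diff, elo64_diff, glicko_mu_diff, radiant_rd, dire_rd]."""
    return [
        radiant["elo32"] - dire["elo32"],          # stable Elo view
        radiant["elo64"] - dire["elo64"],          # more reactive Elo view
        radiant["glicko_mu"] - dire["glicko_mu"],  # Glicko-2 rating gap
        radiant["glicko_rd"],                      # Radiant uncertainty, passed raw
        dire["glicko_rd"],                         # Dire uncertainty, passed raw
    ]


# Made-up ratings for two teams.
team_a = {"elo32": 1620.0, "elo64": 1650.0, "glicko_mu": 1700.0, "glicko_rd": 60.0}
team_b = {"elo32": 1540.0, "elo64": 1500.0, "glicko_mu": 1610.0, "glicko_rd": 180.0}
features = build_features(team_a, team_b)
```

Note that swapping the Radiant and Dire sides flips the sign of the three difference features and swaps the two RD values, so the order of the arguments matters.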

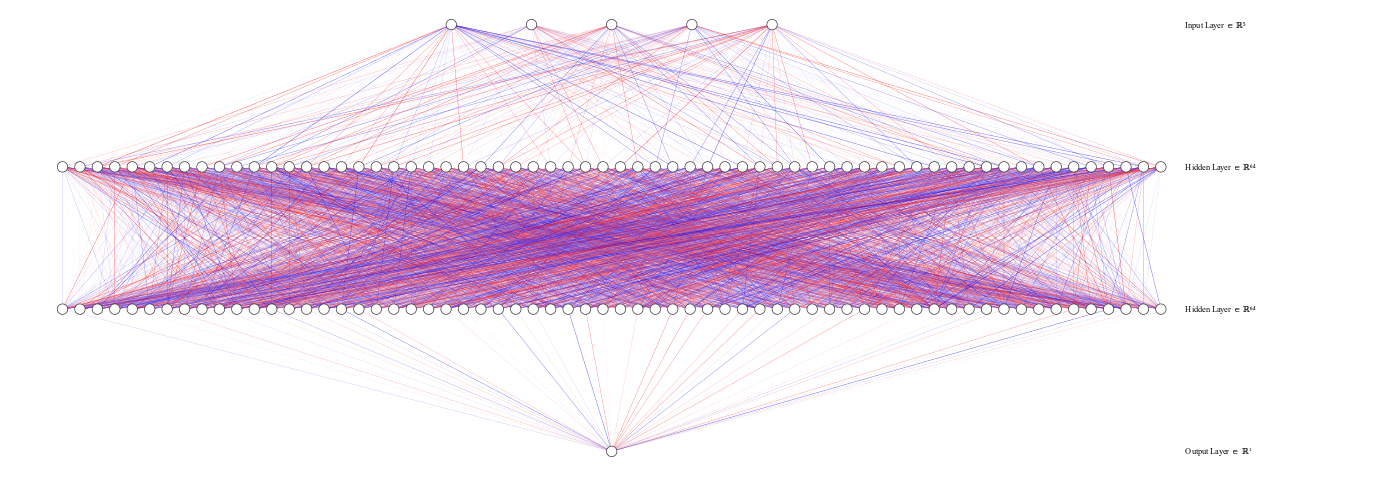

Neural Network Architecture

The script defines a simple but effective feed-forward neural network, also known as a Multi-Layer Perceptron (MLP), with the following structure:

- Input Layer: A linear layer that accepts the 5 input features described above.

- Hidden Layers: Two linear hidden layers, each containing 64 neurons. These layers use the ReLU (Rectified Linear Unit) activation function. The hidden layers allow the model to learn complex, non-linear relationships between the input features and the match outcome.

- Output Layer: A single linear layer with 1 neuron. This neuron’s output is passed through a Sigmoid activation function. The Sigmoid function squashes the output to a value between 0 and 1, which can be directly interpreted as the predicted win probability for the Radiant team.
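To show how data flows through this structure, here is a framework-free sketch of the forward pass. The project itself uses PyTorch; this plain-Python version only illustrates the shapes and activations. It assumes the layers are sized 5 → 64 → 64 → 1 (one reading of the description above), and it uses small random weights where a trained model would load learned values.

```python
import math
import random


def linear(x, weights, bias):
    """Dense layer: one output per row of `weights`."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(v):
    """Element-wise max(0, a)."""
    return [max(0.0, a) for a in v]


def sigmoid(z):
    """Squash a real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def init_layer(n_in, n_out, rng):
    """Small random weights and zero biases, for illustration only."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def forward(x, layers):
    """5 inputs -> 64 -> 64 -> 1 logit -> sigmoid probability."""
    (w1, b1), (w2, b2), (w3, b3) = layers
    h1 = relu(linear(x, w1, b1))
    h2 = relu(linear(h1, w2, b2))
    logit = linear(h2, w3, b3)[0]
    return sigmoid(logit)


rng = random.Random(0)
layers = [init_layer(5, 64, rng), init_layer(64, 64, rng), init_layer(64, 1, rng)]
# A scaled five-feature vector goes in, a Radiant win probability comes out.
p_radiant = forward([1.0, 1.0, -0.5, -1.0, 1.0], layers)
```

With untrained weights the output is meaningless as a prediction, but it always lies strictly between 0 and 1, which is what lets it be read as a probability.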

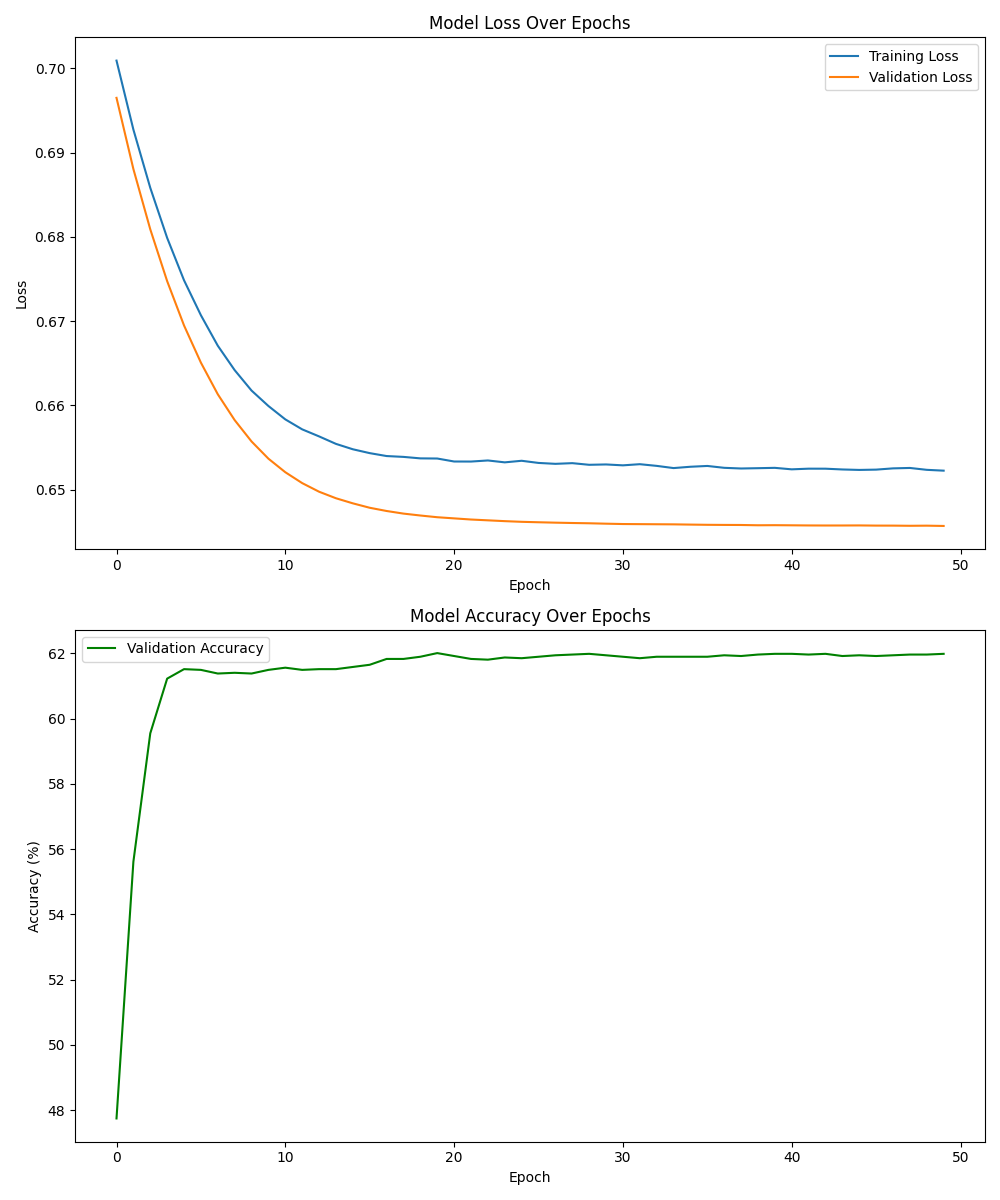

Training Strategy

The model is trained using a standard and robust supervised learning strategy:

- Data Splitting: The dataset is split into a training set (80%) and a validation set (20%). The model learns from the training set, while the validation set is used as unseen data to evaluate its performance and check for overfitting after each epoch.

- Feature Scaling: Before training, the input features are normalized using StandardScaler. This process standardizes each feature to have a mean of 0 and a standard deviation of 1. This is crucial for neural networks, as it ensures that all features contribute equally to the learning process, preventing features with larger numeric ranges (like Elo differences) from dominating the calculations.

- Loss Function: The model uses Binary Cross-Entropy Loss (BCELoss). This is the standard loss function for binary classification problems where the output is a probability. It quantifies how far the model’s predicted probability is from the actual outcome (0 for a loss, 1 for a win).

- Optimizer: The Adam optimizer is used to update the model’s weights. Adam is an efficient and popular algorithm that adapts the learning rate for each parameter, generally leading to faster and more stable convergence.

- Batching: The training data is fed to the model in batches of 64 using PyTorch’s DataLoader. This improves computational efficiency and provides a stable gradient estimate during weight updates.

- Monitoring: The script tracks and prints the training loss, validation loss, and validation accuracy after each epoch. This data is then used to generate plots, providing a clear visual representation of how well the model learned over time.
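The two monitored quantities, loss and accuracy, can be written out by hand to show what they measure. This is a plain-Python sketch of the same quantities, not the training script's code: the clamping constant `eps` and the 0.5 decision threshold are assumptions chosen for the example.

```python
import math


def bce_loss(probs, labels, eps=1e-7):
    """Mean binary cross-entropy between predicted probabilities and 0/1 labels."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(probs)


def accuracy(probs, labels, threshold=0.5):
    """Fraction of matches where the thresholded prediction equals the label."""
    hits = sum((p >= threshold) == bool(y) for p, y in zip(probs, labels))
    return hits / len(probs)


# Predicted Radiant win probabilities and actual outcomes (1 = Radiant won).
probs = [0.9, 0.2, 0.6]
labels = [1, 0, 0]
val_loss = bce_loss(probs, labels)
val_acc = accuracy(probs, labels)
```

A coin-flip prediction of 0.5 costs ln 2 ≈ 0.693 per match, which is a useful baseline when reading the loss curves: a validation loss that stays near that value means the model has learned little beyond chance.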

Difficulties in Dota 2 Prediction

Predicting Dota 2 matches is an inherently difficult task due to several factors that cannot be captured by rating systems alone:

- The Meta: The game is constantly updated with patches that change hero abilities, items, and game mechanics. A team’s high rating might be based on a strategy that is no longer viable in the current “meta,” making historical data less predictive.

- The Drafting Phase: A significant portion of the strategy occurs during the pre-game hero draft. A superior draft can often overcome a skill or rating disadvantage. This model makes its prediction before the draft is known.

- The Human Factor: Player form, health, mentality, and team synergy are critical variables that are not reflected in a mathematical rating. A team might be “on a hot streak” or suffering from burnout.

- Data Scarcity: For new teams or teams with recent roster changes, their rating is highly uncertain (high RD). While the model can see this uncertainty, predicting their performance against established teams is still challenging.

Running the app

- Link to the app: dota2.lesaf.cc

- Link to the repo: github.com